For my Reference and Research class, LIS 2500, we were tasked with reading a study of search tools and discovery platforms and then replicating it, in groups, on a much smaller scale.

Here were the instructions: LIS 2500 Disc_Assignment_revised

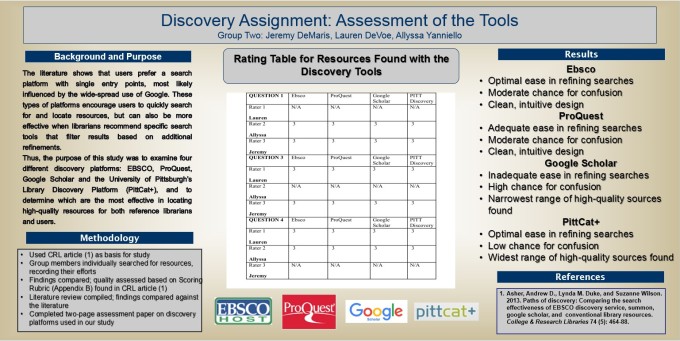

For a close up: group 2 assessment poster_final

You can listen to our presentation at: http://www.screencast.com/t/XThtWyQm

And our paper:

Introduction:

Group Two was tasked with comparing the efficacy of different search platforms in order to gain a greater understanding of the reference process and the tools available to aid in a reference interview. The group looked at four separate search platforms and compared those searches in order to draw conclusions about the strengths and weaknesses of each. Using a previously published study as a basis for our study (see appendix B for information related to this article), this assessment was created to present our conclusions on the four search platforms used: the University of Pittsburgh’s Library Discovery Platform (PittCat+), EBSCO, ProQuest and Google Scholar.

Background:

Reference services are complex, and many tools are available. Libraries offer various search platforms to help students find the scholarly resources that meet their research needs. Choosing which search platform is best can be daunting for students. However, each search tool also offers a variety of features for narrowing down results. The library discovery platform gathers all of the library's available resources in one place, allowing students to sort through thousands of offerings. Both EBSCO and ProQuest focus on the resources that those companies offer, which narrows a search down significantly, while Google Scholar searches Google's own index and offers access to possibly millions of resources. In looking at these search platforms, Group Two looked for which platforms would offer resources that met specific requirements in order to best answer their research questions, using a CRL article (Asher, Duke, and Wilson 2013) as the basis for this process.

Literature Review:

Discovery platforms exist to make research materials more easily searchable and to smooth out issues in the reference process. By reviewing several articles that examined the efficacy of search platforms, Group Two came to several conclusions. Discovery tools aid users in completing search scenarios and “maximize resource use, minimize student frustration, and ensure libraries’ pivotal role in information use and retrieval” (Foster and MacDonald 2013, 2). Asher, Duke, and Wilson concluded in the CRL article that formed the basis of this assessment that “One of the most powerful features of discovery tools is their ability to meet students’ expectations of a single point of entry for their academic research activities supported by a robust and wide-ranging search system” (Asher, Duke, and Wilson 2013, 476). How students use Google when library search platforms are readily available was also a major point of discussion. Mandi Goodsett stated that “Increasingly, libraries find themselves competing for the attention of students with big search engines such as Google and Google Scholar,” which leads to the adoption of discovery tools that employ a single search point (Goodsett 2014, 2). Google has rapidly changed the face of library searches by pushing libraries to adopt similar-looking platforms. Discovery tools appeal to users, and more patrons are willing to use discovery platforms with single entry points. While librarians often criticize discovery platforms, all of the articles we read agreed that discovery tools encourage patron use. Choosing among discovery platforms can be time-consuming, however, and the articles pointed out that users had better experiences when librarians could direct them to specific discovery tools. In general, discovery platforms make the research process easier, and most users seem to prefer the main library discovery tool above all else, since it can retrieve results across all item types and vendors.

Methodology:

To create this assessment, Group Two was first asked to read an article describing a similar study. After reading the article and discussing the difficulties the group had in understanding its methods and results, the group was tasked with answering different questions using various search platforms. Each group member recorded their search efforts and narrated their thoughts while doing so. Once all searches had been performed, the group as a whole compared the various platforms to reach its conclusions. The group then performed a literature review and compiled this assessment after comparing our own results to the literature read. Using the ranking system found in the CRL article (Asher, Duke, and Wilson 2013), the group ranked the articles each member referenced and created the chart found in Appendix A.

Results and Analysis:

While all four discovery platforms used returned articles that met the criteria, the library discovery platform was the group’s favorite search tool. This tool allowed users to refine their searches in a logical manner, while providing the largest number of useful results with the least amount of confusion. The platform performed a search that brought back the widest range of useful resources.

Google Scholar was the group’s least favorite tool. The Advanced Scholar Search portal did not allow as many useful refinements to searches and often provided too many results. In some cases, Group Two was not able to gain access to resources. In other cases, Google Scholar returned results on topics that were not useful and had to be culled before the search results became usable. It also offered no tools to help patrons ascertain which resources were scholarly, peer-reviewed articles. The group agreed that Google Scholar proved the least useful and perhaps the most overwhelming. Group Two would not recommend it to library patrons.

While EBSCO provided quick search results that were easily refined, sorting through its large list of databases to pare the search down to the right topics became overwhelming. However, the EBSCOhost Advanced Search interface is clean, intuitively designed, and easy to read. The available parameters provide a number of useful options to choose from when conducting research without flooding the user with too many options.

In our opinion, ProQuest’s refinements weren’t as adequate as EBSCO’s or the library discovery tool’s, though they were still better than Google Scholar’s. While the Advanced Search interface is intuitively designed and uncluttered, some of us felt that the Document type selection field provided too many options to be useful for general research.

While EBSCO and ProQuest offered decent search options, Group Two found that using the library discovery tool easily incorporated both databases, and many of the same resources were found.

References:

Asher, Andrew D., Lynda M. Duke, and Suzanne Wilson. 2013. Paths of discovery: Comparing the search effectiveness of EBSCO Discovery Service, Summon, Google Scholar, and conventional library resources. College & Research Libraries 74 (5): 464-88.

Chickering, F. William, and Sharon Q. Yang. 2014. Evaluation and comparison of discovery tools: An update. Information Technology and Libraries 33 (2): 5.

Foster, Anita K., and Jean B. MacDonald. 2013. A tale of two discoveries: Comparing the usability of Summon and EBSCO Discovery Service. Journal of Web Librarianship 7 (1): 1-19.

Goodsett, Mandi. 2014. Discovery search tools: A comparative study. Reference Reviews 28 (6): 2-8.

Appendix A:

| QUESTION 1 | EBSCO | ProQuest | Google Scholar | PITT Discovery |
| --- | --- | --- | --- | --- |
| Rater 1: Lauren | N/A | N/A | N/A | N/A |
| Rater 2: Allyssa | 3 | 3 | 3 | 3 |
| Rater 3: Jeremy | 3 | 3 | 3 | 3 |

| QUESTION 3 | EBSCO | ProQuest | Google Scholar | PITT Discovery |
| --- | --- | --- | --- | --- |
| Rater 1: Lauren | 3 | 3 | 3 | 3 |
| Rater 2: Allyssa | N/A | N/A | N/A | N/A |
| Rater 3: Jeremy | 3 | 3 | 3 | 3 |

| QUESTION 4 | EBSCO | ProQuest | Google Scholar | PITT Discovery |
| --- | --- | --- | --- | --- |
| Rater 1: Lauren | 3 | 3 | 3 | 3 |
| Rater 2: Allyssa | 3 | 3 | 3 | 3 |
| Rater 3: Jeremy | N/A | N/A | N/A | N/A |

Videos and Article Titles:

Jeremy DeMaris:

Library: http://www.screencast.com/t/I74dqXSEt

- The effect of volcanic eruptions on global precipitation

- Updated historical record links volcanoes to temperature changes

EBSCO: http://www.screencast.com/t/5r2MkJRyw7

- Clarifying volcanic impact on global temperatures

- Impacts of high-latitude volcanic eruptions on ENSO and AMOC

Google Scholar: http://www.screencast.com/t/ZMYv8rBj6

- Atmospheric CO2 response to volcanic eruptions: the role of ENSO, season, and variability

- The effect of volcanic eruptions on global precipitation

ProQuest: http://www.screencast.com/t/dwjsRYl1Zj7

- Impact of an extremely large magnitude volcanic eruption on the global climate and carbon cycle estimated from ensemble Earth System Model simulations

- Volcanic contribution to decadal changes in tropospheric temperature

Comments: Allyssa – I scored 3 on all of them. None of the materials were out of date, sufficient context was provided, and the articles directly addressed the research question in each case (in my subjective opinion). Lauren – I scored a 3 for the same general criteria: each source directly addressed the question, I believe they would be adequate for use in an academic setting, they’re up-to-date, and also appropriate for general presentations, in my opinion. Lauren also made some good observations about the appropriateness of particular resources which showed she was thinking about the question-specific requirement for this topic.

Allyssa Yanniello:

EBSCO: http://www.screencast.com/t/Aa3aBJxCxJV

- Poor and distressed, but happy: situational and cultural moderators of the relationship between wealth and happiness.

- Money Giveth, Money Taketh Away: The Dual Effect of Wealth on Happiness.

Google Scholar: http://www.screencast.com/t/hhVLD5lJ

- High Income improves evaluation of life but not emotional well-being

- Wealth and Happiness Across the World: Material Prosperity Predicts Life Evaluation, Whereas Psychosocial Prosperity Predicts Positive Feeling

ProQuest: http://www.screencast.com/t/W3avEXHEnI9

- Resolution of the Happiness-Income Paradox

- Does Being Well-Off Make Us Happier? Problems of Measurement

- Income inequality is associated with stronger social comparison effects: The effect of relative income on life satisfaction

Library: http://www.screencast.com/t/5jGiILhcPl

- Income growth and happiness: reassessment of the Easterlin Paradox

- Richer in Money, Poorer in Relationships and Unhappy? Time Series Comparisons of Social Capital and Well-Being in Luxembourg

Comments: Jeremy – EBSCO 3, ProQuest 3, Google Scholar 3, PITT Discovery 3. They all hit at least 5 of the 6 rubric points. Lauren – EBSCO 3, ProQuest 3, Google Scholar 3, PITT Discovery 3. However, I do want to say I thought what Lauren said about Google Scholar was very true; the tool is not very good at narrowing down topics, as it does not have an advanced search option. So some of the options were not good for a general project. However, all of the resources hit at least 5 out of 6 rubric points as well.

Lauren DeVoe:

Library: http://www.screencast.com/users/ldevoe/folders/Default/media/6706e4e8-581d-457f-b9ed-356f31059364

- The civil rights act of 1964 at 50: Past, present, and future.

- The origins and legacy of the civil rights act of 1964.

- Civil rights act of 1964

EBSCO: http://www.screencast.com/users/ldevoe/folders/Default/media/33e5c4f7-0d66-4b41-85ff-562e4da7dff3

- THE SUPREME COURT’S PERVERSION OF THE 1964 CIVIL RIGHTS ACT

- Going Off the Deep End: The Civil Rights Act of 1964 and the Desegregation of Little Rock’s Public Swimming Pools.

ProQuest: http://www.screencast.com/users/ldevoe/folders/Jing/media/460b2947-83cd-48c6-aed9-d0e044429669

- Reinterpretations of Freedom and Emancipation, Civil Rights and Assimilation, and the Continued Struggle for Social and Political Change

- The 1964 Civil Rights Act: Then and Now

- The First Serious Implementation of Brown: The 1964 Civil Rights Act and Beyond

Google Scholar: http://www.screencast.com/users/ldevoe/folders/Jing/media/40c09666-6a9e-44ea-a8b8-fcc2777a6890

- Local Protest and Federal Policy: The Impact of the Civil Rights Movement on the 1964 Civil Rights Act.

- Public Accommodations under Civil Rights Act of 1964: Why Freedom of Association Counts as a Human Right.

Comments: Jeremy – Library 3, EBSCO 3, ProQuest 3, Google Scholar 3, Each resource fit the criteria and met the rubric for appropriateness. I thought that Jeremy’s look at Google Scholar pointed out many of the issues inherent and though Jeremy found good resources, it was obvious that there were issues with the platform. Allyssa – Library 3, EBSCO 3, ProQuest 3, Google Scholar 3, all materials met the necessary requirements.

Appendix B

What is the goal of the CRL study?

The goal of this CRL study was to judge the efficacy and performance of a few different discovery tools, and to understand how users (in this instance, undergraduate students) utilize these tools to perform research. In addition, the authors of the study were interested in identifying how students approach research problems and what assumptions they make about search/discovery tools (i.e. – whether or not to trust the results returned by a specific tool) in order to help students become better researchers, allow librarians and educators to provide proper instruction, and help users understand how specific discovery tools work to obtain the highest quality resources available. The researchers looked at both qualitative and quantitative data in order to reach their conclusions on efficacy within the use of these search platforms. By focusing on how these platforms were experienced by the users, the study was also striving to “identify and address unmet student needs and instructional requirements” by studying how students use these discovery platforms (Asher, Duke, and Wilson 2013, 465). The authors also wanted this study to be more comprehensive, which is why it looks at more than one discovery platform: “This study seeks to move beyond technical issues and single-tool evaluations to make a more comprehensive investigation that compares how students use different search tools and the types of materials they discover during their searches” (Asher, Duke and Wilson 2013, 466). The study sought to understand why students chose to use certain discovery tools over others, and understand how librarians can better help students who come into the library find the best resources possible through the tools that are currently being used.

How will this CRL article relate to this Discovery assignment?

The discovery assignment is similar to this study, as we are trying to compare the efficiency of different search platforms. Two of the four platforms we will be examining are also discussed in the study: the EBSCO Discovery platform and Google Scholar. Some of the study methods outlined in the CRL article (i.e., answering research questions using different discovery tools and judging the quality of selected resources) reflect processes we will be involved in as part of the discovery assignment. We’ll also be assessing the tools we use and providing a test case outline similar to the one utilized in the CRL study (i.e., intro, background, methodology, analysis, results, etc.). The study asked students to evaluate different search platforms with set questions, just as this assignment will. Essentially, the CRL study seemed to be a larger version of this assignment, which encourages a similar process in how this class looks at discovery platforms and judges how best to research a reference question.

What is the “muddiest point” or main idea that you didn’t understand in the CRL study?

Group 2 did not understand how correlations were calculated for the judgments of each rater using the Spearman’s Rho formula. The purpose of using such a formula was apparent, but it would help to understand how the numbers are generated in order to grasp or ‘visualize’ the derived correlations for each question in order to contextualize this step better. Also, when judging the overall quality of resources generated by each tool and comparing them to each other, the authors talked about ‘statistical significance’ (whether the higher overall score received by one tool compared to that of another tool was statistically significant), but we weren’t able to see any information in the body of the text or in the appendices to help us determine what ratio they were using to determine ‘statistical significance’ (i.e. – a mean score that is 1 point higher than another’s mean score?…or something else?).

So a list of questions that should be asked:

What is Spearman’s Rho?

How is it calculated?

Why was it used instead of a different formula?

What other formulas could be used in a study like this?

Where would we find those and actually implement them in our own projects?

What is meant by statistical significance in this study?

What statistics were being used? Or how were they calculated?

What type of statistics should we be looking at in our own research? Where would we go to find this kind of statistic?
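As a starting point for the first two questions: Spearman's rho is generally defined as the ordinary (Pearson) correlation computed on the ranks of the scores rather than on the scores themselves, with tied scores sharing the average of the ranks they occupy. The sketch below illustrates that definition only. The function names and the example scores are hypothetical, and nothing here is taken from the CRL study's own calculations.

```python
from math import sqrt


def average_ranks(values):
    """Rank values from 1 to n; tied values share the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        # Find the end of the run of tied values starting at position i.
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # positions are 0-based, ranks are 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation; returns None when either list has no variation."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    if spread_x == 0 or spread_y == 0:
        return None  # correlation is undefined if a rater never varies
    return cov / (spread_x * spread_y)


def spearman_rho(x, y):
    """Spearman's rho: Pearson correlation of the two lists of ranks."""
    return pearson(average_ranks(x), average_ranks(y))


if __name__ == "__main__":
    # Hypothetical scores from two raters for the same five resources.
    rater_a = [3, 2, 4, 1, 3]
    rater_b = [3, 1, 4, 2, 2]
    print(spearman_rho(rater_a, rater_b))

    # If a rater gives every resource the same score, rho cannot be computed.
    print(spearman_rho([3, 3, 3, 3], [3, 3, 3, 3]))  # prints None
```

One consequence worth noting: when a rater assigns the same score to every resource, as in Appendix A where every rating is a 3, the ranks have no variation and rho is undefined, so a correlation between raters could not be reported for our own results.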

Reference:

Asher, Andrew D., Lynda M. Duke, and Suzanne Wilson. 2013. “Paths of Discovery: Comparing the search effectiveness of EBSCO Discovery Service, Summon, Google Scholar, and Conventional Library Resources.” College and Research Libraries 74, no.